AI Update #18 - AI as in Apple Intelligence, AGI maybe yes maybe not and Jensen

Finding a balance on the Cloud's Edge

Parents: this is an R-rated edition, hide the kids!

Kids: this is an R-rated edition, hide from your parents! (or hide your parents)

AIs: 0100000101101100011000100110010101110010011101000110111100100000011100110111010101100010011011010110100101110100011100110010000001110100011011110010000001111001011011110111010100100000011101010110111001100011011011110110111001100100011010010111010001101001011011110110111001100001011011000110110001111001

I decided to dedicate most of this update to a relatively deep analysis of Apple’s latest announcement. Not because of any ground-breaking innovation, but because Apple is the first company to deploy a partially private AI ecosystem at scale. This is indeed a major development, as it shows what’s possible, and to what extent, with a “need to access” restriction over sensitive and private information.

Redefining AI

And I mean, redefining the acronym not the technology.

Apple announced Apple Intelligence and it took many by surprise when they also confirmed their partnership with OpenAI. One of the key messages Apple has been pushing for the past few years has been that of privacy, which clashes head-on with whatever message OpenAI has been pushing since 2022.

Personally, the surprise came from the fact that Apple was unable to develop the same technology internally, despite rolling out an integration that was anything but fast.

I do have questions, and I’ll also attempt to answer them: was this decision taken because they were afraid to make a blunder like Google with Gemini? Or was it because they couldn’t achieve SOTA and they didn’t want to accumulate further delays? After all iPhone sales have been declining rapidly in 2024, with Samsung capturing market share and moving to #1 after the launch of the Samsung S24. Which, mind you, is sold as an AI phone.

OpenAI hasn’t really won the hearts of the masses, so this move by Apple left many concerned, and the general mood has been well summarised by the King of Memes (aka Elon).

But there’s definitely more than meets the eye, and OpenAI is probably the smallest piece in this puzzle. We also anticipated, in April, that Siri was soon going to get a much-needed brain-lift. This has now been confirmed under the new Intelligence framework, so let’s dig in.

The Small, the Big, the Biggest and the Diffuser

Apple had to put in a lot of effort to keep its promise of privacy, and that resulted in the development of a chain of models and workflows with different levels of privacy and data segregation. Most notably, the new Intelligence architecture is made of:

A small model that runs on device

A bigger one that runs on Apple’s Private Cloud Compute (PCC)

ChatGPT, integrated into Siri and Writing Tools

A diffusion model that runs on device for image creation

The ecosystem also includes:

A small local model integrated in Xcode

A big cloud model for code writing

Guided by a principle of necessary transparency, Apple made available some details about the internals of this new architecture, so we won’t have to divine (too much) to figure out what happens behind the scenes.

The Small Model

The highest level of privacy is offered by running a local LLM. The model itself is a small (3B params) work of optimization art: Apple had to think long and hard to make sure that the user experience would be good, and for that the model needs to be fast.

While the architecture hasn’t been disclosed, a few clues point of course to OpenELM, or at least a variant of it, as certain parameters (vocabulary) don’t match with those in the paper while others do (the size).

We can summarize the optimizations used to make this experience possible on edge devices (at least, iPhone 15 Pro and above):

Attention: the model uses Grouped Query Attention (like Llama) as a fast attention mechanism

Shared Vocabulary: same weights for both input and output embeddings

Palletization: the model is quantized at an average of 3.5 bits-per-weight

LoRA/DoRA: Apple calls them Adapters, but whatever the term used, it’s a good idea to use LoRAs to specialize the model on the fly for specific tasks

Caching/Power: a mix of techniques to improve caching and optimize the speed vs battery used
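To get a feeling for why the quantization step matters so much on a phone, here is a back-of-the-envelope sketch. It only uses the two numbers quoted above (3B params, 3.5 bits-per-weight) and compares them with a plain 16-bit baseline, which is my assumption for the "uncompressed" case. It ignores lookup tables, adapters and any layers kept at higher precision, so treat the result as a rough order of magnitude and not as Apple's actual footprint.

```python
def model_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate storage needed for the weights, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


N_PARAMS = 3e9  # "3B params", as quoted in the text

fp16_gb = model_size_gb(N_PARAMS, 16)    # assumed baseline: 16 bits per weight
quant_gb = model_size_gb(N_PARAMS, 3.5)  # average bits-per-weight quoted above

print(f"16-bit weights : {fp16_gb:.2f} GB")
print(f"3.5 bits/weight: {quant_gb:.2f} GB")
print(f"reduction      : {fp16_gb / quant_gb:.1f}x")
```

Roughly 6 GB shrinks to about 1.3 GB, which is the difference between "doesn't fit next to your apps" and "fits".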

A lot of emphasis is put on Adapters. These are relatively small “patch” files (a few tens of megabytes) that can alter the model on the fly to increase performance on specific tasks: there are Adapters for summarization, proofreading, email replies etc… This allows Apple to ship a single, general model that can be specialized on demand. Also, loading and unloading an adapter is faster and more space-efficient than loading and unloading a full model each time.
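For those who have never looked inside a LoRA, here is a minimal sketch of the idea in plain Python. It is not Apple's implementation: the class name `AdaptedLinear`, its methods and the toy numbers are all made up for illustration. The point is only that the base weights stay frozen while a small low-rank pair of matrices (A and B) is plugged in and out per task.

```python
from typing import List, Optional, Tuple

Matrix = List[List[float]]


def matvec(m: Matrix, v: List[float]) -> List[float]:
    """Multiply matrix m (rows x cols) by vector v (cols)."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class AdaptedLinear:
    """Hypothetical layer: frozen weight W plus a swappable low-rank update."""

    def __init__(self, weight: Matrix) -> None:
        self.weight = weight  # shape (d_out, d_in), never modified
        self.adapter: Optional[Tuple[Matrix, Matrix, float]] = None

    def load_adapter(self, a: Matrix, b: Matrix, alpha: float) -> None:
        rank = len(a)  # A has shape (rank, d_in), B has shape (d_out, rank)
        self.adapter = (a, b, alpha / rank)

    def unload_adapter(self) -> None:
        self.adapter = None

    def forward(self, x: List[float]) -> List[float]:
        y = matvec(self.weight, x)  # base model path: W x
        if self.adapter is not None:
            a, b, scale = self.adapter
            delta = matvec(b, matvec(a, x))  # low-rank path: B (A x)
            y = [yi + scale * di for yi, di in zip(y, delta)]
        return y


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Number of values stored by one adapter for one layer."""
    return rank * (d_in + d_out)


layer = AdaptedLinear([[1.0, 0.0], [0.0, 1.0]])  # identity as a toy base
x = [2.0, 3.0]
base_out = layer.forward(x)
layer.load_adapter(a=[[1.0, 1.0]], b=[[1.0], [0.0]], alpha=1.0)  # rank 1
adapted_out = layer.forward(x)
layer.unload_adapter()
restored_out = layer.forward(x)
print(base_out, adapted_out, restored_out)

# Illustrative sizes only: one 2048x2048 layer versus a rank-16 adapter.
print(2048 * 2048, lora_params(2048, 2048, 16))
```

The last line is why adapters are cheap to ship and swap: with these (illustrative) sizes the adapter stores 64 times fewer values than the layer it modifies.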

When a query is judged to be too complex to be reliably answered by the local model, it is sent to the larger remote model.

The Bigger Model

The bigger model runs directly on Apple Silicon servers. As mentioned above, that happens on their Private Cloud Compute infrastructure.

Wait a moment… Apple Silicon, no Nvidia??

It’s no secret that Tim Cook is not the biggest Jensen fan (how dare you, Tim!) so, to avoid depending on Nvidia, their supply chain and monopoly-level prices, they have decided to leverage - for inference - what they already had: a frankly amazing architecture!

What about training, you ask? Training a full-scale LLM on Apple Silicon doesn’t seem the best use of anyone’s time. In fact, Apple relied on Google TPUs to train their models. This means that, if they started working on this architecture last year, they had to leave the familiar Torch stack behind (ouch!): Apple’s own write-up describes training on AXLearn, their framework built on top of JAX and XLA, rather than on Tensorflow or Torch.

I’m certain that developing an ecosystem for Apple Silicon wasn’t painless for the engineers, as they had to re-implement countless tools and frameworks that were already available for Nvidia GPUs, and such an ecosystem will take years to mature, even at Apple's scale.

This brings us to the next point: Apple has developed the capability to serve models at scale, but has not yet developed the ability to train full-scale LLMs internally, at least not on their own hardware.

Anyway, how big are the full-scale LLMs they’re offering? We can speculate that the models used are roughly similar in size to GPT-3.5 (let’s say 130-180B params), at least looking at the benchmarks, and this should be enough to provide relatively high-quality responses, without being too demanding in terms of compute.

The Biggest Model (ChatGPT)

When Siri is summoned from the bowels of Hell, it can request access to ChatGPT and if the user agrees, the query is handled by ChatGPT itself.

Let’s be clear, once ChatGPT is granted access, it will have access to all data required to fulfil the user’s request and that can be in the form of emails, messages, calendar, pictures and so on.

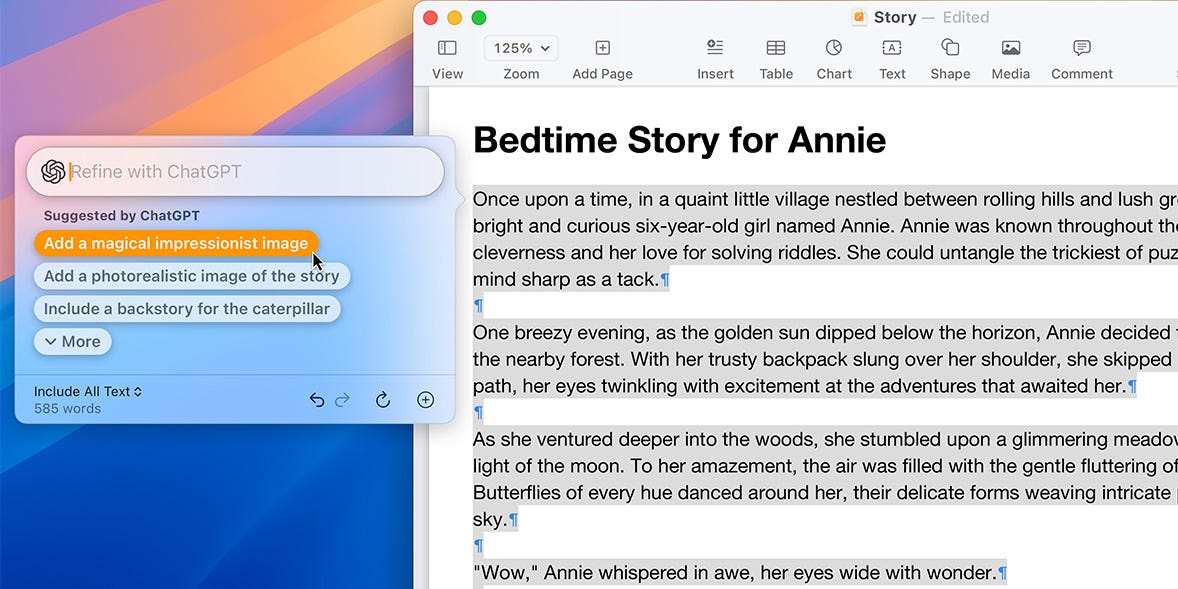

ChatGPT can be thought of as a third-level fallback for tasks - like planning I would imagine - where better reasoning capabilities are required, and where a smaller GPT-3.5 level model is not enough to offer a reliable answer. For other apps, like Writing Tools, it instead appears to be the primary means of interaction.
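Putting the three tiers together, the escalation logic can be sketched roughly as follows. Apple has not published how a query is judged "too complex", so the `complexity` score, the two thresholds and the behaviour when the user says no to ChatGPT are all assumptions of mine, there only to make the flow concrete.

```python
from enum import Enum


class Tier(Enum):
    ON_DEVICE = "on-device model"
    PRIVATE_CLOUD = "Private Cloud Compute model"
    CHATGPT = "ChatGPT"


# Hypothetical thresholds on a 0..1 complexity score (not from Apple).
LOCAL_MAX = 0.4
CLOUD_MAX = 0.8


def route(complexity: float, user_allows_chatgpt: bool) -> Tier:
    """Pick the smallest tier assumed able to handle the request."""
    if complexity <= LOCAL_MAX:
        return Tier.ON_DEVICE  # data never leaves the device
    if complexity <= CLOUD_MAX:
        return Tier.PRIVATE_CLOUD  # data leaves the device, stays with Apple
    if user_allows_chatgpt:
        return Tier.CHATGPT  # data goes to OpenAI, only after consent
    # Assumption: without consent we stay on the best Apple-hosted model.
    return Tier.PRIVATE_CLOUD


for score, consent in [(0.1, False), (0.6, True), (0.9, True), (0.9, False)]:
    print(score, consent, "->", route(score, consent).value)
```

The interesting design choice is the consent gate: it sits only in front of the third tier, which is exactly where the privacy guarantees change hands.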

Apple didn’t mention it directly, but they did show a demo of the new Siri with “semantic search” capabilities, which points without much doubt to a RAG system. We don’t know much about how the embeddings are extracted, where they are stored and so on. Hopefully we’ll get more clarity, since embeddings are as sensitive as the resource itself! Privacy aside, it would be interesting to know how these embeddings are used: whether it’s to enrich the context of the local LLM, the remote one or all of them.
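If RAG is new to you, the mechanics fit in a few lines. The sketch below is a toy: `embed` is a stand-in that just counts words, where a real system would use a learned embedding model, and the documents are invented. Nothing here reflects how Siri actually does it. It only shows the retrieve-then-prompt pattern, and why the embeddings end up carrying the same information as the documents they index.

```python
import math
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: List[str], k: int = 1) -> List[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: List[str], k: int = 1) -> str:
    """Enrich the LLM context with the retrieved snippets."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


docs = [
    "flight to Rome lands at 9pm on Friday",
    "dentist appointment moved to Monday morning",
    "grocery list: milk eggs bread",
]
print(build_prompt("when does my flight land", docs))
```

Whichever model receives that prompt (local, PCC or ChatGPT) also receives the retrieved snippet, which is why the question of where retrieval happens matters for privacy.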

The Diffuser

It wouldn’t be a complete AI framework if it lacked image generation capabilities, and in fact Apple has introduced a series of capabilities that were not available before, like Genmoji (yes… GenAI Emoji) in different styles, and later on they will add the ability to easily alter pictures using speech or text: “change Jensen’s leather jacket into a space suit”.

About Privacy

Now that the architecture is clear, let’s take a step back and try to understand which channels effectively extract possibly sensitive or private information from an iDevice.

The small model appears to be safe from this point of view, in fact all processing is done locally, so I’m confident data stays as private as it gets.

The larger model runs on the PCC, which means the data has to leave the device. The PCC is not, and cannot be, end-to-end encrypted. So we’ll have to trust Apple that our data is safe, not archived and not misused in other ways.

There are 2 sides to this coin, or it wouldn’t be a coin: the first is that Apple has taken extra steps to secure their infrastructure by design, making the system stateless and private. They even committed to providing production builds of the PCC software publicly for security audits. The second side is that we’ll have to trust Apple to do exactly what they claim, without changing course.

You might remember the iCloud CSAM Scanning controversy: after much pushback from the public, Apple eventually took a step back and decided not to proceed with scanning customers’ images on iCloud. This new channel can potentially become a target of very similar lobbying, from various segments of society as well as from governments.

Then there’s ChatGPT: this one’s easy. You send data, OpenAI gets it. Pretty straightforward! Your level of privacy would be the same offered by the current OpenAI privacy policy, so comfort levels will vary from individual to individual.

An overhaul of Siri, and in general of the capabilities available on mobile devices, was very much needed. I have been using, for over a year, a series of apps to semantically search documents, find pictures based on content, run speech-to-text and in general to add a few quality-of-life improvements to a device that, so far, had very limited discovery capabilities.

Thankfully these tools will now be natively integrated in most smartphones and the level of privacy required will be decided by users. It’s nice to have the option. It’s also good that by-design data extraction and remote processing are kept to a reasonable minimum, as the vast majority of users won’t have any idea of what happens behind the scenes when GenAI is called to act.

It would be even better to have an option to turn off remote models altogether, but that might be provided at some later date.

How Does OpenAI Get Paid?

That’s a fair question, and the answer is: they won’t.

Tim Cook convinced Altman that taking ChatGPT, and thus the OpenAI brand, to the masses, is worth more than any money Apple could pay them. This might be true in the long run, but OpenAI still has to pay Microsoft to run ChatGPT on Azure.

Apple will certainly nudge iPhone users to convert to paid OpenAI users, if they opt frequently for ChatGPT, and in that transaction they’ll get their pound of flesh. The way this will unfold remains to be seen, though Apple has made it clear that ChatGPT is only one possible choice and that others (Gemini) will soon join the ranks. Other arrangements will have to be found for the - very large - user base in China, where both Gemini and ChatGPT are banned.

In short, the modularity of the new Siri will provide the needed flexibility to avoid any form of “vendor lock-in” and leave to users the ability to choose the LLM they prefer (or those the government allows them to use). I hope Le Claude* will be made available too.

*Le Claude is the son I imagine if Claude and Le Chat (Mistral) were to have a baby LLM. He only speaks French.

Finally, Answering the Questions

We can now attempt to answer the questions I had.

Apple focused on creating a reliable on-device user experience and, no doubt, invested massively to develop their PCC infrastructure. While they might have succeeded (we still have to try the local models, after all) with edge processing, it looks like they didn’t have enough time - and very possibly the know-how - to develop a GPT-4 level LLM. The lack of dedicated hardware was very possibly another limiting factor, and so the choice went to OpenAI, in what seems a win-win situation for both companies.

Apple might still come up with a more sophisticated LLM in the next couple of years, but it doesn’t seem to be their focus right now. I have the impression that they prefer to leave LLMs to those having LLMs as their primary business, and this is a reasonable choice. Things will change as the hardware landscape evolves.

Users' voices will ultimately become the final decision factor in the Edge vs Cloud AI battle, but if Instagram, Facebook and TikTok have taught us anything, it is that the general population doesn’t seem to care a lot about privacy, so we might already have an answer to this one last doubt.

AGI… Might Not Be Around the Corner

Chollet (AI researcher at Google) was recently interviewed about his views on LLMs and AGI. The interview is long but interesting, and if you’ve time, you should watch it.

A very short clip published on X (give me Twitter back Elon, please) focuses on where the industry is going (or rather, not going):

The rush to LLMs has impacted AI in 2 major ways:

Frontier research is not being published anymore, everything is kept secret

Interest in LLMs virtually killed research in most other (essential) areas of AI

There is only one scenario where these 2 outcomes might play out well: if LLMs were the path to AGI. We discussed this option multiple times but at this stage, pretty much everyone agrees that while LLMs can be a path towards a better AI, they won’t become AGI by themselves.

Let me clarify this concept.

We all went crazy when ChatGPT was released to the public, and I fell immediately in love with it. Then the ebb and flow of adjustments and new releases started. As we learned the tool and its limitations, our love for LLMs also evolved and the relationship got rocky. We were given a tool, then the tool was constrained, then we were given a few more capabilities and so on. But one fact remained: LLMs proved useful and the impact was real.

Companies like Fiverr and other gig platforms make for a good proxy of the impact of GenAI in the labour market. Fiverr estimated a positive impact of +4% due to AI. What happened exactly? According to them:

As we see a category mix shift from simple services such as translation and voiceover to more complex services such as mobile app development, eCommerce management, or financial consulting.

Do people still need translation and voice over? Of course they do, but those jobs have been taken over by GenAI and LLMs. Demand thus shifted towards higher skilled jobs, like app development. Why? Well, because of LLMs. Think about it, with LLMs a non-coder, or someone with minimal coding skills, can still strike a gig on one of those platforms and deliver good value to their customers.

So yes, LLMs are taking jobs but they are also generating a new market that requires a human in the loop. As a side effect, higher skilled people are capturing a segment that those with much lower skills have lost for good. Feel free to extrapolate this concept forward.

Once we have established the value of LLMs, we can also understand why the focus for major companies has become so narrow. And you don’t have to go far. If you use any LLM on a regular basis, ask yourself, how much less have you been using Google? The answer for me is a qualitative: a lot less.

At the same time, as LLMs capture market share and reallocate resources elsewhere, companies are becoming less and less willing to share any research with the community. I “jokingly” - but it’s not a joke at all - call OpenAI… ClosedAI. Without sharing, the progress towards AGI has slowed down.

Discoveries in GenAI are expensive, so if “inexpensive” research requires global collaboration, how do we hope to advance without it in one of the most expensive research fields in existence? For comparison, the Large Hadron Collider in Geneva, one of the largest experiments ever built by mankind, cost a mere $5Bn, almost the same amount Amazon invested in Anthropic. LLMs make high-energy physics look cheap.

But, as pointed out in the interview, the issue is that we are becoming aware of the limitations of LLMs and we notice them on a daily basis. Sure, GPT-4 is great at summarizing, horrible at other things due to alignment (like… being creative), and plainly inadequate at more advanced reasoning tasks. The answer is very likely not another LLM.

Intelligence involves the ability to adapt to new and unforeseen situations, whereas memorization (broadly, what an LLM does) is simply recalling previously learned information. So larger LLMs mean larger memory machines but not better machines.

Chollet advocates for discrete program search and program synthesis as alternatives to deep learning to achieve AGI. While deep learning works well for pattern recognition and intuition, program synthesis is more suitable (well, in theory at least) for reasoning and planning.

If you’re wondering how deep learning differs from program synthesis, simply speaking, you have to remind yourself that deep learning fits a parametric curve to data, while program synthesis constructs graphs of logical operators to solve tasks. To put it in more familiar terms, program synthesis is a sort of symbolic reasoning on steroids with the ability to generate functional programs. Please don’t chase me with a machete for dumbing it down this much.
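Since I'm already dumbing it down, I might as well go all the way with a toy. The sketch below contrasts the two approaches on the same four input/output examples. The "deep learning" side is a straight-line least-squares fit (deliberately the weakest possible parametric curve), and the "program synthesis" side is a brute-force search over compositions of four primitives I made up. Neither is what Chollet or anyone else actually runs; it only illustrates curve fitting versus discrete search.

```python
from itertools import product
from typing import Callable, Dict, List, Optional, Tuple

Examples = List[Tuple[int, int]]


def fit_line(examples: Examples) -> Tuple[float, float]:
    """Curve fitting: tune the parameters (slope, intercept) of y = a*x + b."""
    n = len(examples)
    mean_x = sum(x for x, _ in examples) / n
    mean_y = sum(y for _, y in examples) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in examples)
    var = sum((x - mean_x) ** 2 for x, _ in examples)
    slope = cov / var
    return slope, mean_y - slope * mean_x


# Hypothetical building blocks for the discrete search.
PRIMITIVES: Dict[str, Callable[[int], int]] = {
    "inc": lambda v: v + 1,
    "double": lambda v: v * 2,
    "square": lambda v: v * v,
    "neg": lambda v: -v,
}


def run(program: Tuple[str, ...], value: int) -> int:
    """Apply the primitives of a program left to right."""
    for name in program:
        value = PRIMITIVES[name](value)
    return value


def synthesize(examples: Examples, max_len: int = 3) -> Optional[Tuple[str, ...]]:
    """Program search: return the shortest program matching every example."""
    for length in range(1, max_len + 1):
        for program in product(PRIMITIVES, repeat=length):
            if all(run(program, x) == y for x, y in examples):
                return program
    return None


examples = [(0, 1), (1, 4), (2, 9), (3, 16)]  # secretly (x + 1) squared

slope, intercept = fit_line(examples)
program = synthesize(examples)

print("line   :", slope, intercept, "-> f(10) =", slope * 10 + intercept)
print("program:", program, "-> f(10) =", run(program, 10))
```

The line is only an approximation inside the range it has seen and drifts away outside of it, while the search recovers the exact rule and keeps working on unseen inputs. The price is that the search space explodes as programs get longer, which is the "in theory at least" part.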

AGI keeps looking more and more like a series of Lego blocks, each specialized in different high-level functions and yes, they will probably become part of a single model, and maybe we will be able to call it an LLM. But at that point, it won’t be an LLM any more than the Wright Flyer is an airplane compared to an F-35. Certainly, they both have wings, but the similarities end there.

If what has been said in the interview is accurate, to achieve AGI we need to reopen the field of AI to pre-2022 levels and invest in adjacent research topics, not only LLMs. We - both you and I - have a responsibility here. We can’t directly reopen AI research, but we definitely have a say in where and how we invest.

Nvidia Doing Too Well?

Here comes the R-rated part

Can a company do too well? It seems like a rhetorical question, but it’s really not.

A few hours ago Nvidia topped both Microsoft and Apple, becoming the most valuable company in the world, with a market cap of merely $3.3Tn. Aside from the fact that Jensen can now buy Sagittarius A*, the black hole at the center of the Milky Way, and rebrand it to Jensen Space Trash Can, some employees are starting to complain that senior employees are coasting while waiting for their shares to vest.

Nvidia’s culture is particularly appreciated, and Jensen is the most popular CEO among employees, according to a Blind survey. I know you want to know the least popular CEOs and I’ll gladly spoon-feed your curiosity: Erik Nordstrom (from… Nordstrom) and David Goeckeler (Western Digital), both earned 0%. That must hurt. A bit like when you go home and your dog 🐕 doesn’t come to say hi, that’s how you know your life has taken a dark turn. Anyway, let’s go back on topic.

Similar situations are not new. Microsoft had the same issue at the end of the 20th century, but in that case a sudden and brutal self-correction (the dot-com bubble, to be clear) ended up fixing the issue when, in the span of a few months, the stock went from $34 in March 2000 to $13 in December of the same year.

But Jensen doesn’t seem worried, in fact he lives in a state of worry and I genuinely admire him (even more, if possible) because that takes a different level of humility.

"I don't wake up proud and confident, I wake up worried and concerned."

Jensen

Jensen knows that Nvidia’s business is very cyclical in nature and that the company flirted with bankruptcy more than once because of this. It’s not unthinkable that it might happen again and he acknowledges that, despite being today in a unique position of dominance.

He’s undoubtedly enjoying his incredible success, and he’s got more devoted fans than me to take care of, apparently.

I am still conflicted on one point: does he always wear the same 2 leather jackets, or like me he just buys in bulk (I buy polos and pants in multiples of 5, mostly to keep Terry confused) so it only looks like he’s always wearing the same things? I’ll ask him next time.

I believe that there is a fundamental difference between Microsoft and Nvidia, net of their respective success. Microsoft was famous for their sales volumes and infamous for the quality of their products: we all remember with absolute terror Windows 95/98/ME/XP/Vista. Nvidia, on the other hand, manufactures products of the highest technological level and quality. They charge for them, but in a way “they’re worth every penny”. The culture at Nvidia, blamed by some, but without doubt the reason the company has the reputation and sense of pride that it deserves, shows that it’s possible to become great, and remain at the top, without developing the cut-throat attitude of certain other big players.

One, or very probably more competitors, will eventually show up with enough strength to eat into Nvidia’s market, the cycle will repeat and the new employees will be grateful that the “(nearly) impossible to get fired” attitude was there in the first place.

Let Jensen enjoy his supermassive black hole, he deserves it.

Bye for now. Hi Jensen! 👋 Please please put 64GB VRAM on the 5090.