It was just 4 days ago that we discussed how inference speed plays a role in making AI sustainable in the long term, and a few hours ago, OpenAI announced GPT-4o, which we will forever dub: GPT-😮 for added drama.

I felt like a seer with the useless superpower of having visions of AI developments 3 days in the future, and that’s why you get this update!

O is for Omni

GPT-😮 is a version of GPT-4 with new capabilities, but is it? In the last update we briefly mentioned that the LMSYS Chatbot Arena had a new im-a-good-gpt2-chatbot model available, and that im-a-good-gpt2-chatbot might have been a preview of this one. Or maybe there’s more than meets the eye? We’ll get to this in a moment.

In the world’s most boring demo, which can only be described as a bunch of geeks in hoodies asking an overexcited AI boring questions, or in other words a version of Her without Joaquin Phoenix’s moustache, you can get a taste of the model’s capabilities, which I have listed here for brevity:

Speed

Alright. I’m being unnecessarily harsh, but without peeking under the hood, most of the announcement is really about speed, voice and reasoning capabilities.

In reality, the major news is that the model is presented as fully multimodal, or to quote Will Depue from OpenAI:

GPT-😮 is a natively multimodal token in, multimodal token out model.

To see why this is important, consider that, until today, audio conversations went through Whisper for speech-to-text, then to GPT-4, then on to another model (Tortoise? XTTS?) for text-to-speech. This pipeline works, but it’s slow, and you can’t talk to an overexcited AI because, by the last stage of the pipeline, the model no longer has any idea what intonation it should use.
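To make the problem concrete, here is a minimal Python sketch of such a cascaded pipeline. Everything in it is a stand-in: the `AudioClip` type, the three stage functions and the latency numbers are hypothetical and only illustrate the shape of the problem, not how Whisper, GPT-4 or any text-to-speech model is actually called.

```python
from dataclasses import dataclass


@dataclass
class AudioClip:
    words: str  # what was said
    tone: str   # how it was said, e.g. "panicked" or "cheerful"


# Hypothetical per-stage latencies in seconds: illustrative, not measured.
STAGE_LATENCY = {"stt": 0.5, "llm": 2.0, "tts": 0.8}


def speech_to_text(clip: AudioClip) -> str:
    # Only the words cross this boundary; the tone is dropped here.
    return clip.words


def language_model(prompt: str) -> str:
    # Stand-in for a text-only model: it only ever sees a string.
    return f"Reply to: {prompt}"


def text_to_speech(text: str) -> AudioClip:
    # This stage never saw the user's tone, so it falls back to a default.
    return AudioClip(words=text, tone="neutral")


def cascaded_pipeline(clip: AudioClip) -> tuple[AudioClip, float]:
    text = speech_to_text(clip)
    reply = language_model(text)
    spoken = text_to_speech(reply)
    # The stages run one after another, so their delays add up.
    total_latency = sum(STAGE_LATENCY.values())
    return spoken, total_latency


reply, latency = cascaded_pipeline(AudioClip("call me an ambulance", "panicked"))
```

In this toy version the reply comes back with a neutral tone no matter how the user sounded: the panic was discarded at the first hop, and the stage delays simply add up.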

By having a single model handle speech-to-text + reasoning + audio generation, you basically get your overexcited AI girlfriend! The interaction feels more natural, and it really gets more exciting! Now you can probably see why Apple pre-empted this announcement with their own. Yes, chances are Siri will soon become your favourite AI Girlfriend/Boyfriend/Companion/Submit/Whatever again, after more than a decade of lukewarm co-living on the same device.

Now the GPT-😮 naming makes more sense: the omni is about the available channels, namely vision, speech and text. The new GPT is also better at prompt adherence, and variable binding seems to have been more or less solved.

Image of a person that looks like Joaquin Phoenix. In the scene, Joaquin is shaking hands with his moustache and the moustache is on fire, like in the cover picture of Wish You Were Here. The scene has the same framing as the one on the Pink Floyd album.

The Quest for Real-Time Assistants

The reason Humane’s reviews have been… unforgiving, to put it mildly, was not just that the device and its subscription are expensive, but also that it runs hot and is difficult to use, slow and inaccurate, according to the reviewers (I haven’t had a chance to try it in person yet). It fell short of the promise of delivering a powerful AI assistant.

GPT-😮 aims at filling this gap nicely: it runs on a much more powerful device than a pin - your phone - all of its processing happens in the cloud, and it’s backed by one of the best AI models currently available to the public, which now happens to be (finally) fast enough to be used almost in real time.

I’d love it if part of the work could be done on-device, and I am curious to see how (or if) this issue and that of privacy are going to be addressed by Apple, in any shape or form, or if we have to give up and assume OpenAI will get all our phones’ data anyway. But I digress.

Inference speed on capable models enables the creation of new interfaces that are accessible, convenient and finally flexible, especially as they start to get connected to more devices. Yes, they also open the door to dystopian scenarios of AI-controlled smart homes. Ever watched Margaux? No? Great, spare yourself the pain.

Keep in mind that similar results can also be achieved with non-multimodal models, by creating a pipeline of models dedicated to each task, connected maybe by a router model. The advantage of having it all in a single model is that no context is lost, which today gives you a cheeky AI assistant, but IF theory of mind (ToM) develops in large models, the assistant’s responses can immediately become more relevant to the context, intent and mood of the moment.

User: GPT-😮, I’m having a heart attack, call me an ambulance and tell me I’ll be fine

GPT voice: Yes, An Ambulance, you’ll do just fine

User: 🥺

As a side note, and in pure OpenAI spirit and best practices, Voice Mode is not available yet. Why wait to announce something until you have it, when you can announce it while you still don’t? It will be rolled out in “the coming weeks”, but you can play with GPT-😮 even on the Free tier.

To GPT-4 or not to GPT-4?

Is this a GPT-4 model? OpenAI says yes but… Can I say no? I don’t expect OpenAI to publish absolutely anything about GPT-😮 - and count me surprised if they do - but a few clues point at something that doesn’t smell like GPT-4.

GPT-4 is not natively multimodal

GPT-4 is slow

GPT-4 costs 2x more

There is no shortcut to speed, unless you parallelize the model on an even larger number of GPUs, but this sounds unlikely as it would raise costs. We discussed how we can use API price as a proxy for model cost in the last update, and with GPT-😮 costing 50% less than GPT-4, the chances of it being the same model are minimal.
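As a back-of-the-envelope illustration of the price argument, the sketch below compares the cost of the same request under two price lists. The per-million-token prices are placeholders picked only to reflect the "50% less" ratio mentioned above, and `request_cost` is a hypothetical helper, not part of any SDK.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 price_in: float, price_out: float) -> float:
    """Cost in dollars, with prices given in dollars per 1M tokens."""
    return (input_tokens * price_in + output_tokens * price_out) / 1_000_000


# Placeholder price lists (dollars per 1M tokens), assumed for illustration.
OLDER_MODEL = {"price_in": 10.0, "price_out": 30.0}
NEWER_MODEL = {"price_in": 5.0, "price_out": 15.0}

# Same hypothetical request priced under both lists.
old_cost = request_cost(2_000, 500, **OLDER_MODEL)
new_cost = request_cost(2_000, 500, **NEWER_MODEL)
ratio = new_cost / old_cost  # fraction of the old bill you now pay
```

If price really does track serving cost, paying half for a faster model is hard to square with simply throwing more GPUs at the same weights.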

While the speed improvement is significant enough to point us at a different architecture, overall capabilities are rather similar, so what gives? Well, it might be - and we are in pure speculation territory now - a checkpoint of the upcoming GPT-5 to be used for early model evaluation. Maybe chosen at a point where it slightly outperforms GPT-4 without giving away what’s coming with GPT-5?

It’s either that or they have made an in-between release post-GPT-4 and pre-GPT-5, but I can’t see why, unless that was a specific request for the Apple deal.

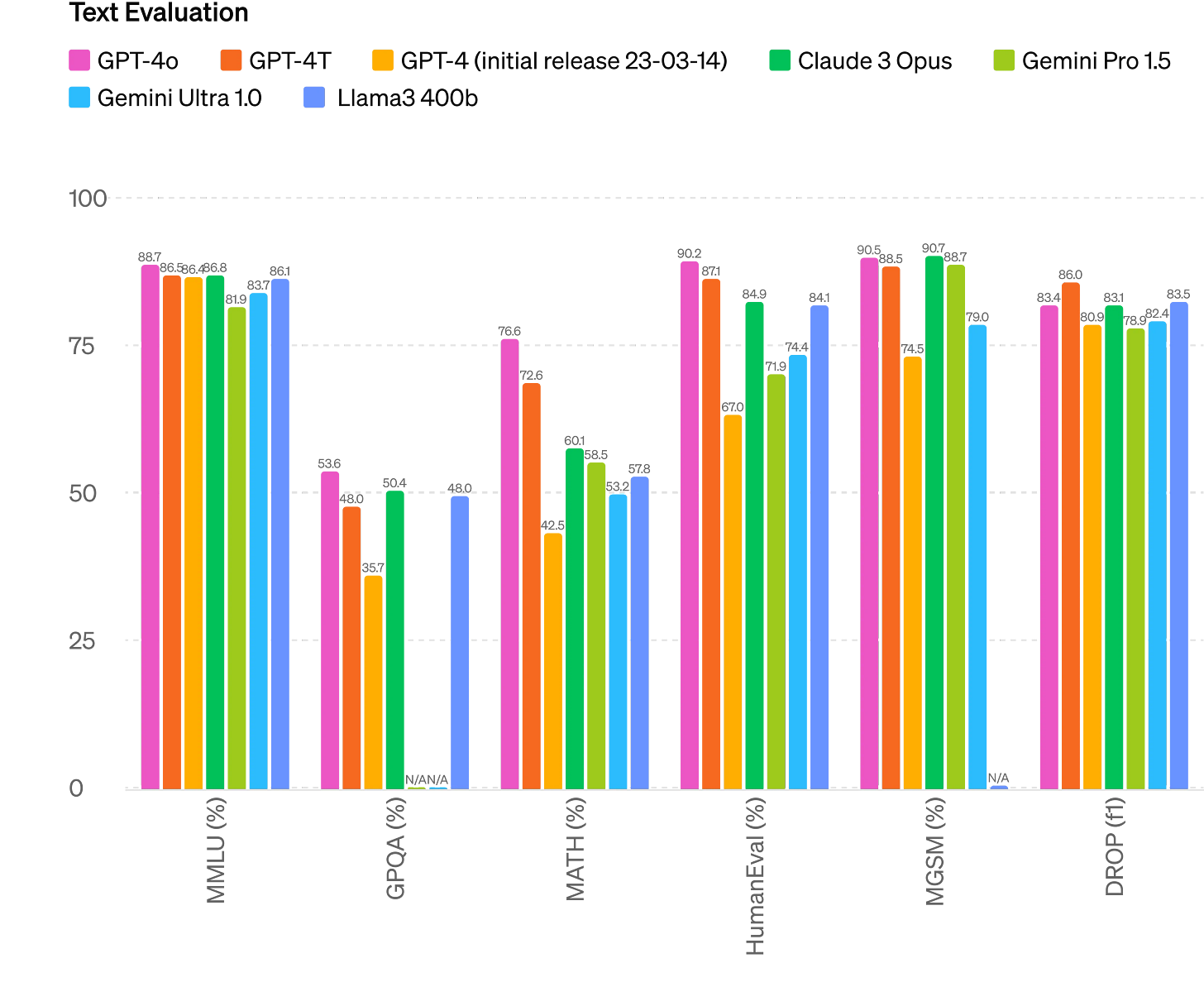

Whatever that is, we have some data, and what’s interesting is that OpenAI is benchmarking against Llama 3 400B, which is still in training (scroll to the end of the article linked).

My money is on a different architecture. Also note how Llama 3 400B is a top performer even before it’s born! And Claude 3 holds its ground really well too. Where OpenAI is still unmatched is on vision tasks: the other models come close(-ish) but there’s still a meaningful gap.

Another point worth mentioning is that GPT-😮 now performs much better on non-English languages, thanks to a new tokenizer.

What About OpenAI’s Edge?

Last week I mentioned that OpenAI’s edge might be slipping, not because they’re doing worse, but because competitors are getting better! This impression remains very valid even today. I caught an interesting conversation on X from Meta/FAIR about OpenAI’s capabilities:

The last tweet is very important: Armen refers to “enough knowledge” in the hands of the community, not Meta or a single company! If all of OpenAI’s edge today is just 2 months away from us commoners, then the impression that the edge is disappearing remains accurate.

In Conclusion

GPT-😮 is faster and much cheaper than GPT-4. It’s better than its predecessor at most tasks, even if not by much, but it’s an enabling technology for compelling (quasi) real-time assistants. You might argue that we could achieve the same with Llama 3, and the answer is yes, except for the voice part, which is not a small element in the context of an assistant.

The new architecture - this being my assumption - shows that capabilities are important, but inference speed is essential: we don’t need an AI with an IQ of 200 to find out if the restaurant we are planning to visit closes on Wednesdays, but we most certainly need it to be done in less than 5 minutes and for a negligible cost.

Better language performance means that those who don’t speak English will still have the ability to access an assistant, with benefits for both OpenAI and these customers.

Overall though, this feels more like an incremental - though very welcome - improvement than a breakthrough capable of keeping the competition at bay. And let’s take a moment to mourn the real MVP, our beloved GPT-3.5 that has been put to rest just today. Maybe that’s why GPT-😮 looks so shocked?

And that’s all for today, please go play with ChatGPT!