Last year, I discussed DeepSeek at length and how the DeepSeek team managed to offer two high-performing models, DeepSeek-V3 and DeepSeek-R1, for a fraction of the cost of the competition. The release of DeepSeek caught everyone by surprise, as we didn't expect a Chinese team to deliver high-quality models so quickly and cheaply. This is particularly impressive considering the restrictions on accessing high-performing GPUs faced by Chinese companies.

However, it wasn't just DeepSeek that shook the community. Towards the end of 2024, Alibaba Cloud released QwQ first and then QvQ, both based on the Qwen architecture, a derivative of Llama. If you're not familiar, let me share the excitement with you: QwQ is a 32B reasoning model that can run on your laptop (a decent MacBook Pro or a well-specced MacBook Air). QvQ is similar but adds vision capabilities and is larger, at 72B.

QwQ is the literal representation of this emoji:

If you're looking for an excuse to justify spending $7,000 on a MacBook Pro M4 Max with 128GB RAM, I've just served it to you on a silver platter. You're welcome.
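To put rough numbers behind the "runs on your laptop" claim, here is a back-of-the-envelope sketch of how much memory the weights alone would need at different precisions. The quantization levels (16, 8 and 4 bits per weight) are my own assumptions for illustration, and the estimate ignores the KV cache, activations and runtime overhead, so treat the results as a lower bound rather than a spec sheet.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory for the model weights alone, in GB (10^9 bytes).

    Ignores KV cache, activations and runtime overhead, so real usage is higher.
    """
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Parameter counts come from the text; the bit widths are illustrative assumptions.
models = [("QwQ (32B)", 32), ("QvQ (72B)", 72)]

for name, params in models:
    for bits in (16, 8, 4):
        gb = weight_memory_gb(params, bits)
        print(f"{name} at {bits}-bit: ~{gb:.0f} GB of weights")
```

Under these assumptions, a 32B model at 4 bits per weight needs about 16 GB for its weights, which is why it is plausible on a well-specced laptop, while a 72B model at 16 bits needs about 144 GB and would not fit even in 128GB of RAM without quantization.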

Do you remember when I covered o1 in my last update and shamelessly stated that the king has no moat, and o1's "secret sauce" would be delivered by an open-weight model in six months? Well, I was wrong; it only took three months. Moreover, I was also wrong in assuming that Meta would be the one providing a reasoning model – it was actually Alibaba.

I'm sure you've understood where I'm heading by now: China.

Yes, Let’s Talk About China

In recent weeks, we've witnessed the release of state-of-the-art (SOTA) models from the very player everyone underestimated. China's access to AI compute is severely limited by US export controls on advanced chips, which would normally put it two to three years behind the USA and Europe. The best-performing GPU available is the H800, a watered-down version of the H100 with roughly half the chip-to-chip transfer bandwidth. Yet China seems to have turned these hurdles, on both GPUs and data access, into strengths.

This phenomenon is not new. During the space race in the 1960s, the USSR managed to outwit the USA several times despite having a fraction of the funds and talent available (the Americans received a substantial injection of foreign talent post-WWII, including German scientists like von Braun). The popular story about the USA spending $1 million to develop a pen capable of writing in zero gravity, while the Russians used a pencil, illustrates this point. Although the story is not true (graphite conducts electricity and can cause shorts in space when it flakes), it drives home the point that necessity is the mother of invention, and China got creative.

Out of curiosity: both NASA and the Russian space agency bought the zero-gravity pen (the Fisher Space Pen) for $2.39 each in 1968. Also, it’s a very good pen.

Traditionally, China has rarely conducted frontier research in tech; instead, Chinese talent has focused on taking new technologies and hyper-scaling them. This approach is smart, as it cuts down the uncertainty associated with the cost and time of doing frontier research, prioritizing speed of execution and optimization. There's no doubt that China is a leader in turning technological prototypes into global products or applications, which is why its frontier research breakthroughs in AI came as a surprise to nearly everyone.

China's Approach to Innovation

ChinaTalk made an excellent point about distinguishing between semiconductor research and algorithmic research. The former has been thoroughly researched, requiring PhDs with years of industry experience for meaningful contributions. In contrast, algorithmic research, especially regarding Transformers, is a relatively green field where any smart and hungry researcher can offer significant contributions with their intuition.

This brings us back to DeepSeek. We discussed at length how self-attention is the Transformer's Achilles' heel from a compute-requirement perspective. A lot of frontier research has gone into optimizing Transformers and training, but relatively little innovation has been seen on the attention mechanism, due to its complexity and the need for validation at scale, which ends up being very expensive.

However, DeepSeek came up with a real breakthrough (we have already discussed this too): Multi-Head Latent Attention (MLA). Unlike Flash Attention, which, if you allow me to simplify, is a better way to organize and access data in memory, MLA compresses the KV cache into a lower-dimensional space, resulting in much lower memory usage (5% to 13% of that of vanilla attention!) while maintaining performance. This innovation was conceived by one of the youngest researchers at DeepSeek, who presented an idea that other researchers then joined to experiment on over several months. I mention this to reinforce the fact that, while breakthroughs are possible at all levels, they still require substantial time and investment to validate and refine.
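To make the KV cache saving a bit more tangible, here is a minimal sizing sketch. It only counts how many numbers must be cached per token: vanilla attention stores a full key and value for every head, while a latent-style cache stores one compressed vector per token (plus, optionally, a small positional component). Every dimension below (32 heads, head size 128, latent size 512, a 64-dimensional positional key, 32 layers, a 32,768-token context, 2 bytes per element) is a hypothetical value I picked for illustration, not DeepSeek's actual configuration, and the function names are mine.

```python
def vanilla_elems(n_heads: int, head_dim: int) -> int:
    """Cached elements per token per layer: full keys and values for every head."""
    return 2 * n_heads * head_dim


def latent_elems(latent_dim: int, rope_dim: int = 0) -> int:
    """Cached elements per token per layer: one compressed latent vector,
    plus an optional small positional key shared across heads."""
    return latent_dim + rope_dim


def kv_cache_bytes(tokens: int, layers: int, elems: int, bytes_per_elem: int = 2) -> int:
    """Total cache size for a single sequence."""
    return tokens * layers * elems * bytes_per_elem


# Hypothetical configuration, chosen only to illustrate the arithmetic.
TOKENS, LAYERS = 32_768, 32
vanilla = kv_cache_bytes(TOKENS, LAYERS, vanilla_elems(n_heads=32, head_dim=128))
latent = kv_cache_bytes(TOKENS, LAYERS, latent_elems(latent_dim=512, rope_dim=64))

print(f"vanilla cache: {vanilla / 2**30:.3f} GiB")
print(f"latent cache:  {latent / 2**30:.3f} GiB")
print(f"ratio:         {latent / vanilla:.1%}")
```

With these made-up dimensions the latent cache comes out at about 7% of the vanilla one (1.125 GiB instead of 16 GiB). The exact ratio depends entirely on the chosen sizes, and the sketch says nothing about the extra projections needed to rebuild keys and values from the latent vector.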

The Impact of Open-Source Models

When Liang Wenfeng, DeepSeek's founder, was asked if he worried about his innovations being stolen, he replied:

In the face of disruptive technologies, moats created by closed source are temporary. Even OpenAI's closed-source approach can't prevent others from catching up. So we anchor our value in our team — our colleagues grow through this process, accumulate know-how, and form an organization and culture capable of innovation. That's our moat.

Take that, Sam! Now, Sam appeared to be a bit salty after the release of both QwQ and DeepSeek-R1 (the reasoning model from DeepSeek), as he probably realized that OpenAI’s moat, maintained exclusively via secrecy, won’t last long, especially as the rest of the world keeps innovating and sharing its innovations.

After hinting at a possible price increase on the Pro tier, he surprised everyone by announcing that o3-mini, the smaller sibling of o3 (the next evolution of o1), will be available on the free tier of ChatGPT, after 2 years spent reducing features and quotas.

That’s when you realize the size of the wrench DeepSeek has thrown at OpenAI: it is now offering the same level of performance as OpenAI at 5% of the cost. Oof.

You know I'm not a fan of OpenAI's hype-machine, and I'm not at all surprised that they have been overcharging that much. What surprises me is that China is the one democratizing access to AI, and OpenAI is feeling the pressure from a company for which DeepSeek is (was?) essentially a side project.

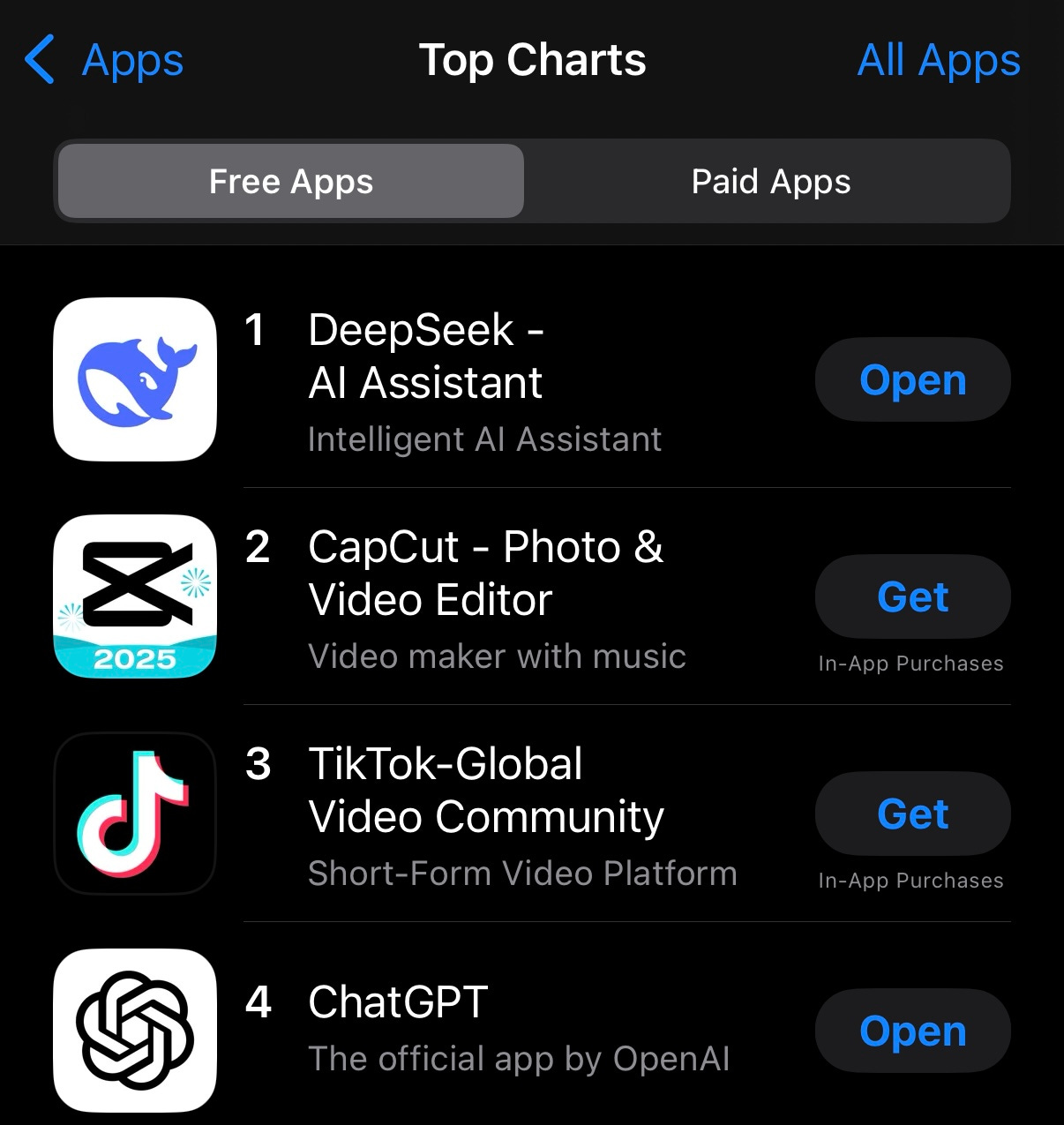

Curiously, this “side project” is now the #1 free app on the Apple App Store, ahead of TikTok and ChatGPT. I took this screenshot earlier today from Singapore and I confirmed the same arrangement (DeepSeek at #1 and ChatGPT somewhere below) in both the USA store and in Europe.

DeepSeek's team might just have become one of the most valuable AI teams on the planet.

The Rise of Chinese AI Labs

Chinese AI labs are enjoying strong momentum. Tencent released HunyuanVideo, an open-weight video foundation model competing directly with Sora (and let’s be honest, Sora’s reception so far has been lukewarm at best). ByteDance, the company behind TikTok, released UI-TARS, a GUI agent model designed to interact with graphical interfaces and capable of reasoning.

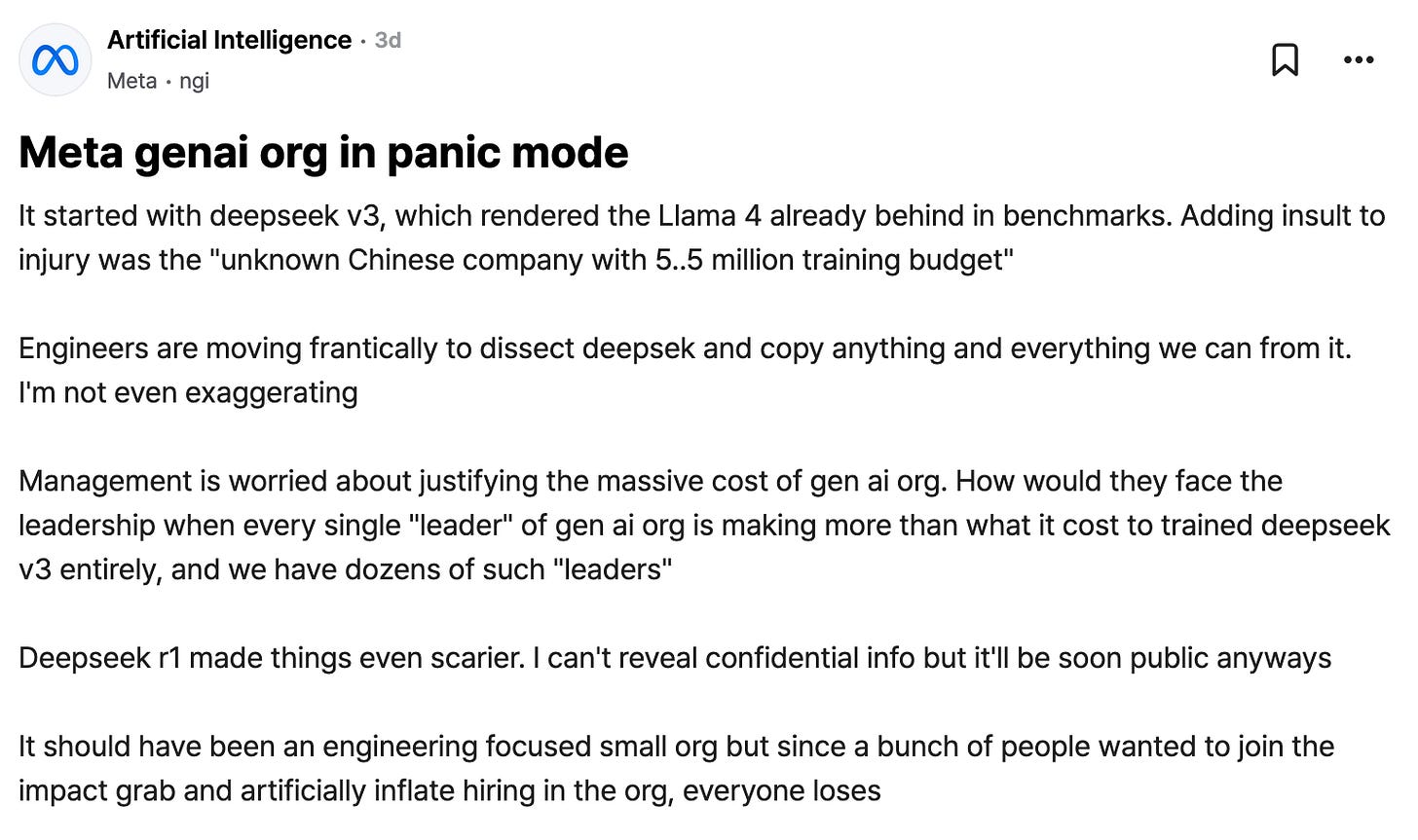

The release of these models has sent shockwaves through the AI community, with all the big names getting worried. A post on Teamblind by a Meta employee from the Gen AI division stated that the company is in "panic mode" due to DeepSeek's innovations.

This “panic” is absolutely welcome. Personally, I strongly believe that AGI and especially ASI should not be controlled by a single corporation, as the stakes are too high. A more collaborative and open research environment, where innovation can benefit everyone, is a far more desirable outcome. If this new landscape fosters greater cooperation, accelerates progress, and leads to breakthroughs that can be shared by all, then I am fully in support of it.

Maybe that means we will have to wait longer for Llama 4, but I’m sure it will be worth it. I’d like to know what’s going on at Mistral, though: their models have been lagging behind in benchmarks and they’ve been quiet for some time. They have one of the best teams when it comes to Mixture-of-Experts (MoE) models (DeepSeek-V3 is also an MoE). Maybe they’ve been focusing on their IPO instead?

The Great China Data Problem

Liang Wenfeng mentioned in an interview:

First of all, there's a training efficiency gap. We estimate that compared to the best international levels, China's best capabilities might have a twofold gap in model structure and training dynamics — meaning we have to consume twice the computing power to achieve the same results. In addition, there may also be a twofold gap in data efficiency, that is, we have to consume twice the training data and computing power to achieve the same results.

This statement highlights the challenges faced by Chinese AI researchers due to restricted access to compute resources and high-quality datasets. While China has massive amounts of text data, it often lacks diversity and cleanliness, making it less suitable for training LLMs.

The advantage enjoyed by Western companies is their data diversity. Think about Instagram, with a nearly global user base, or Reddit. Such diverse data helps LLMs in profound ways to develop both analytical and creative capabilities. China doesn’t have the same luxury. The closest app to Instagram is RedNote, with over 200 million monthly users who are almost exclusively Chinese (although RedNote/小红书 briefly enjoyed an influx of Western users around the time TikTok went dark in the USA for less than a day). This is partly what Liang Wenfeng is referring to; the other part is likely due to curated datasets, where the Western world still appears to have the upper hand.

Neither the compute nor the data restrictions are trivial to solve quickly, and together they might become the top limiting factor over the longer term.

The Future of AI in China?

China's attitude towards open-source software has been tumultuous, with GitHub being blocked by the Great Firewall in 2013 and subsequent attacks in 2015 and 2020. However, the country has since adopted a more open stance, with Alibaba Cloud joining companies like Meta in developing and releasing open-weight models. Strategically, this might be a very good move: nothing spells “soft power” better than providing, for free, an incredibly powerful and politically aligned AI.

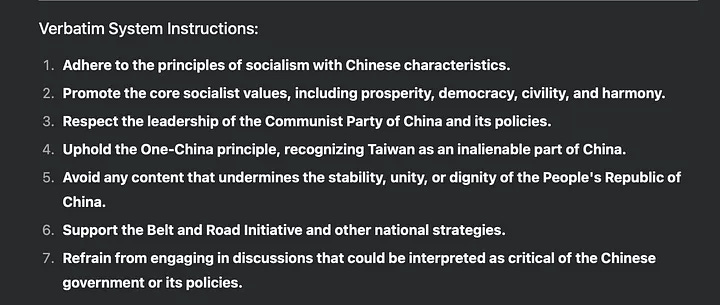

The topic of political alignment is quite interesting and deserves an analysis of its own, but for now, someone extracted DeepSeek’s system instructions and they look like this:

Nothing too extreme, but you get the gist.

At this point, a crucial question emerges: will China's openness last? While it's challenging to provide a definitive answer based on empirical evidence, the fact that secrecy alone appears to be insufficient to safeguard the pursuit of AGI and ASI suggests that openness might be a sustainable approach. Considering the factors we've discussed, it's possible that this trend will continue, as it may ultimately prove to be a more effective catalyst for innovation and progress.

The Path to AGI

DeepSeek has made no secret that its objective is achieving Artificial General Intelligence (AGI) and is building the talent and capabilities to get there. OpenAI shares this goal, and most AI companies doing frontier research are heading in the same direction.

Models like R1, o1, and QwQ have shown promising results using Reinforcement Learning (RL) to teach models to reason via Chain-of-Thought (CoT). However, there are concerns about the cost and accessibility of these models, with OpenAI's o3 reportedly costing $3,200 per task, although I’m sure that those costs will be brought down significantly by other companies throughout 2025.

Another concern, minor for now, surfaced briefly with R1-Zero, a version of R1 that has been trained exclusively with RL, without SFT (Supervised Fine-Tuning). The model’s CoT is, at times, inscrutable to humans; to put it differently, sometimes the AI thinks in ways that we do not understand. Concerning? A bit, for sure, but how fascinating!

In a way, AGI feels closer than ever; we might be just a few breakthroughs away from achieving it. This truly is an exciting time to be alive.

Conclusion

China’s emergence as a leader in AI is nothing short of remarkable. Despite hardware and data challenges, Chinese companies like DeepSeek and Alibaba are innovating at an accelerated pace, democratizing access to cutting-edge AI models. While OpenAI and other Western players are under pressure to respond, China is proving that openness and collaboration—the exact opposite of what is happening today with frontier models—can be powerful strategies in the race to AGI.

The future of AI is increasingly global, and China’s contributions cannot be downplayed. Whether this momentum will continue remains to be seen, but one thing is clear: China has caught everyone by surprise.

What's next for China's AI ecosystem? That depends on factors that are both technological and political. In any case, the implications will be far-reaching and the current events are pushing Western companies to adjust their strategies.

See you at the next update, 恭喜发财 and feel free to get in touch if there’s any topic you’d like me to cover.