Last time I promised a more in-depth analysis of the inference chips market, and it's time to deliver on this promise. Today, we will discuss this along with LLama 3.1 and, of course, CrowdStrike.

Understanding the Market Split

At the risk of being redundant, let's agree on one fact: the AI market has split into two parts—training and inference. This will be our tenet for this analysis. If you disagree, you should generally reconsider your life choices up to this point, and move along.

The reason for this split is relatively straightforward and driven mostly by market and financial considerations, rather than pure technological needs. We could potentially run the entire AI world on GPUs, but in truth, we don't want to because:

GPUs are sold by a monopoly and priced accordingly.

GPUs are power hogs, making them expensive to run.

GPUs have gone through supply constraints.

GPUs do not perform too well at inference tasks.

If you have a dog, you’ll know how the dog wants you to throw his favorite toy but also doesn’t want you to touch it.

That's how we feel about GPUs. We don’t want them, but we also want them because:

GPUs, although expensive, are still cheaper than ASICs.

GPUs are really flexible; you can play God of War as well as run LLama 3 on them.

The software stack is mature, documented, and widely supported.

As anticipated at the beginning, with one exception, all reasons for the “don’ts” are purely about CapEx and OpEx, with a strategic consideration: no one wants to rely on a single manufacturer for a critical piece of infrastructure.

Since companies are not charities, engineering preferences will take second place in the face of financial considerations, so the split happens.

Do You ASIC Much?

In the previous update, I made the following statement:

The general perception is that training happens once, but inference is a continuous process, and to an extent, this is true.

This is indeed the general perception, but it comes with a caveat, especially now that LLM research is going full steam ahead. The reason I emphasized "to an extent" is that all major AI companies are likely utilizing their GPUs close to capacity. OpenAI is supposedly training GPT-5 (maybe Sora?), Anthropic is working on Claude-3.5-Opus, Meta released LLama 3.1 405B today, and so on. Those GPUs are going brrrrr everywhere. But if all your GPUs are busy with training and running experiments, how do you serve models to the masses?

ASICs (Application-Specific Integrated Circuits) are custom chips designed to serve, as the name implies, a very specific purpose. Today they’re referred to as AI Chips, AI Accelerators, or Inference Accelerators. I'll use these terms interchangeably throughout the post.

Broadly speaking, in the world of AI, you have three main choices:

GPUs, which we discussed.

FPGAs, generally cheaper than GPUs and ASICs but less performant.

ASICs, expensive but much more performant than both FPGAs and GPUs.

If demand for a certain task is relatively low, going with an FPGA (Field-Programmable Gate Array) is a good middle ground. But if demand is high, then those FPGA designs need to be converted to ASICs and manufactured en masse.

An FPGA is, in simple words, an integrated circuit that can be (re)programmed to perform all sorts of logic functions. On the contrary, you can’t generally reprogram an ASIC.

ASICs offer very high performance and consume the least power among all (measured on a per-token basis in the case of LLMs), but they’re also the most expensive. This is because, being so specific, they (still) lack the benefit of cost reduction offered by scale manufacturing. And this becomes an important issue.

You might be wondering how many companies are currently developing custom chips. The answer is: I don’t know exactly, but I found a range that goes from 40 to 190. This non-comprehensive list puts the number at 41, which is certainly an underestimation as many names I’m familiar with are missing.

The high number of custom-designed chips is the main factor constraining them from reaching a scale large enough to benefit from economies of scale. This is probably achievable by the top-5, but very unlikely for the top-30.

Achieving scale and reducing costs is a major concern for companies developing such ASICs, but it’s much less so for the likes of Amazon, Google, Meta, and Microsoft that are working with their own designs. The reason again is quite straightforward: those costs are absorbed relatively quickly by the sheer mass of users served by those chips rather than by GPUs. Just think of meta.ai: by simply running on a more efficient platform, they can save a very significant amount of money.

This is also the reason why companies like Groq offer their solutions as a Cloud service. You can still buy Groq cards to the tune of $20k each, roughly the cost of an Nvidia H100, with the difference that the demo Groq runs (with 568 chips) would set you back around $11M, while you could serve the same model (albeit at a much lower throughput) for 1/10 of the cost using Nvidia.
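
The arithmetic behind that comparison is easy to check. Here is a back-of-the-envelope sketch that uses only the figures quoted above (568 chips at roughly $20k each, and the "1/10 of the cost" ratio); the variable names are mine, and the result is an order-of-magnitude estimate, not a quote.

```python
# Back-of-the-envelope CapEx comparison using the figures quoted in the text.
GROQ_CARD_PRICE_USD = 20_000  # approximate price per Groq card
GROQ_DEMO_CHIPS = 568         # chips used in the Groq demo
NVIDIA_COST_DIVISOR = 10      # "1/10 of the cost", at a much lower throughput

groq_demo_capex = GROQ_CARD_PRICE_USD * GROQ_DEMO_CHIPS
nvidia_capex = groq_demo_capex / NVIDIA_COST_DIVISOR

print(f"Groq demo cluster: ${groq_demo_capex / 1e6:.2f}M")
print(f"Nvidia setup:      ${nvidia_capex / 1e6:.2f}M")
```

The first figure lands on the "around $11M" mentioned above. Keep in mind that the two setups are not equivalent in throughput, so this compares entry prices, not cost per token.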

You should get the idea: owning a custom inference infrastructure makes sense only if you can sustain high levels of utilization for extended periods. In other words, you need traffic, lots of it, and for a long time, before the CapEx is amortized. But this is not the only bet made by inference accelerator companies.
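
To make the amortization argument concrete, here is a minimal sketch of a break-even calculation between owning and renting. The function name and every number in it are hypothetical and chosen purely for illustration; a real assessment would also account for depreciation, staffing, and financing.

```python
from typing import Optional


def months_to_break_even(capex_usd: float,
                         own_opex_per_month_usd: float,
                         cloud_cost_per_month_usd: float) -> Optional[float]:
    """Months of sustained traffic needed before owning beats renting.

    Returns None when renting never costs more per month than running
    your own hardware, which means the CapEx is never recovered.
    """
    monthly_saving = cloud_cost_per_month_usd - own_opex_per_month_usd
    if monthly_saving <= 0:
        return None
    return capex_usd / monthly_saving


# Hypothetical numbers, for illustration only.
high_traffic = months_to_break_even(11_360_000, 150_000, 600_000)
low_traffic = months_to_break_even(11_360_000, 150_000, 120_000)

print(high_traffic)  # a bit over two years of sustained traffic
print(low_traffic)   # None: at this volume, renting always wins
```

The cloud bill scales with traffic while the CapEx does not, which is why utilization is the variable that decides the outcome.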

Betting on Transformers

Besides scale and sustained utilization, you need to make another important and less obvious bet: Transformer-based architectures are here to stay.

While GPUs can be used for anything, ASICs cannot really be repurposed, unless certain trade-offs are made. We might all agree that Transformers are not going anywhere, at least for a little while, but design and manufacturing work on different timescales.

From design to tape-out, an ASIC can easily take up to 2 years. From breakthrough to adoption of a new architecture, it can take as little as 6 months. That is to say, all companies working on their own ASICs are betting heavily that Transformers—as we know them today—will be around for at least the next few years. This should be the case, but it’s a risk worth discussing.

The conviction you have about how much the general architecture will change will also define the amount of flexibility (and thus, potential performance loss) you’ll want in your accelerator.

Inference can take place at two different levels: on a regular endpoint, such as your laptop or phone, and at a public/private cloud level. These workloads are handled quite differently. Local workloads are currently being managed quite well by Apple. If you own any Apple Silicon, it comes with an NPU (what Apple calls its own ASIC) that significantly accelerates ML workloads. NPUs have been on iPhones since the iPhone 8, so a large portion of the consumer market can already deploy AI workloads at different levels on regular devices.

The other half of the sky is much more fragmented. Google Pixel phones have mounted an EdgeTPU (Google’s version of Apple’s NPU) since the Pixel 6, but other brands are still waiting to benefit from custom chips. Fragmentation aside, phones are getting a boost in capabilities with dedicated silicon, and it shouldn’t be long until we see all the other brands catching up.

All ASICs on the market support Transformers in most flavors and definitely support common open models like LLama or Mistral, but what if there is a shift towards a different type of architecture? In that case, it’s back to the drawing board, as ASICs will need to be redesigned.

This is the risk that all such companies are currently taking. While there are no major shake-ups in sight, we have interesting architectures like Mamba that are not supported by Transformer-optimized designs. Some troubles might even come from Transformers themselves.

BitNet is still a Transformer architecture, but it’s not supported by Transformer-specific ASICs. Why is that a concern? BitNet has an interesting advantage over regular Transformers: it uses a fraction of the compute and memory to run. This is a very compelling argument. When using ternary weights (where each weight can only take one of three values: -1, 0, or 1), multiplications, which are the most compute-intensive part of the inference cycle in regular Transformers, are reduced to additions and simple sign flips, where 1 becomes -1. You get the idea that such changes could eventually become appealing enough to justify the design of new ASICs. Also, alternative approaches might well come up in the next 2-5 years.
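
To see why weights restricted to -1, 0, and 1 remove the multiplications, here is a toy matrix-vector product in plain Python. This is a sketch of the general idea only, not BitNet's actual kernel; the function names and the sample values are mine.

```python
from typing import List


def ternary_matvec(weights: List[List[int]], x: List[float]) -> List[float]:
    """Multiply a ternary weight matrix by a vector without multiplications."""
    out = []
    for row in weights:
        acc = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi   # multiplying by 1 keeps the value
            elif w == -1:
                acc -= xi   # multiplying by -1 is a sign flip
            elif w != 0:
                raise ValueError("weights must be -1, 0 or 1")
            # w == 0: the input is simply skipped
        out.append(acc)
    return out


def dense_matvec(weights: List[List[int]], x: List[float]) -> List[float]:
    """Reference implementation with ordinary multiplications."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


W = [[1, 0, -1],
     [-1, 1, 1]]
x = [0.5, 2.0, -3.0]

result = ternary_matvec(W, x)
print(result)  # [3.5, -1.5], same as dense_matvec(W, x)
```

Hardware built around multiply-accumulate units gets no benefit from this shortcut, which is the reason a chip tuned for regular Transformers would not automatically be a good fit.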

The risk of architectural changes is an impending threat to the ASICs market, simply because they can render custom chips quickly obsolete. Of course, there are ways to mitigate this, and many companies have chosen this path: reducing specialization. I’m referring to all accelerators that are programmable and flexible, like Groq. They are still specialized for certain operations, but can be reconfigured to remain flexible as architectures evolve. This approach works, but as with any other de-specialization, it comes inevitably at the cost of performance, and picking the right trade-off doesn’t sound simple.

The Software Stack

Another important issue with ASICs is the software stack. After all, you have probably written your project to run with CUDA, because all your tools have been written around it in one way or another. So how do you run it on a custom accelerator?

Virtually all companies I’ve spoken with told me two things:

We are writing the software stack.

We offer out-of-the-box support for LLama.

Both are fair points, and there is a common trend. Writing SDKs and the toolkit necessary to fully support your own custom chip is a difficult endeavor. More established companies like Graphcore and Cerebras know this well. In fact, hearing directly from their customers, it seems that their hardware is better than the software.

This surprises no one; just think that CUDA has been around for 17 years and—like a great Barolo—needed a full 10 years to mature, becoming today one of Nvidia’s most important moats, as we learned from the ZLUDA saga.

The software stack’s complexity must not be underestimated. When working directly with hardware, any hardware, you generally need a minimum set of components: a compiler, a driver, and a debugger. The latter is critical for adoption. Nvidia introduced the first debugger for CUDA back in 2012, while debugging OpenCL is still a low-key nightmare today.

Writing a compiler backend is also very difficult. This factor can be mitigated by using compute units based on ISAs (Instruction Set Architectures) that already have support for frameworks like LLVM—think RISC-V, for instance. In the absence of this, vendors have to develop their own custom backend for LLVM and roll with it.

The driver is probably the “easiest” of the lot and a necessity, together with a user-space library, to allow customers to interact with the hardware. There are no shortcuts here.

Assuming all parts are now in place, we have to think about interfaces, or basically the points of access between our application/model and the hardware itself. Vendors have to create their own APIs, and if they all differ, it leads to fragmentation, raises the bar for adoption, and introduces lock-in (which is a nice-to-have for vendors but not nice-at-all for customers). An important attempt to counter this trend has been initiated by Intel with oneAPI [1], which is an open interface targeting compute units from GPUs to accelerators. There’s a lot to do, but the aim of oneAPI is to ensure a common interface across all AI hardware. The most important chip manufacturers are now behind the initiative: Intel, Google, ARM, Qualcomm, and Samsung. Notably missing are Nvidia and AMD, since the goal of the project is to compete with both CUDA and ROCm.

Equipped with a better—albeit high-level—understanding of what making a custom chip entails, let’s understand which factors might contribute to the success of one accelerator versus another.

Defining Success

As companies keep looking for viable AI business models, it becomes increasingly obvious that the umbilical cord to the GPU must be severed. Specialized hardware has clear advantages, but it comes at the cost of high CapEx, reduced flexibility, and a still-green software ecosystem. In short, compared to GPUs, the barrier to entry is high. Let's see how to lower it.

Not all accelerators are created equal. One way many ASICs cut corners compared to GPUs is by implementing math only at low precision. This is absolutely fine in AI, where FP16 and INT8/4 (and mixed-precision) are becoming the new normal. High precisions like FP32 and FP64 are useful when modeling nuclear reactions within a star—or a bomb—but for AI purposes, we are very happy with lower precision. So, we will assume that a buyer is on the market looking to support AI-specific workloads only.
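
To give a feel for what "low precision" means in practice, here is a minimal sketch of symmetric INT8 quantization in plain Python. It shows one common scheme (a single scale for the whole tensor), not what any specific accelerator does; the function names and sample weights are mine, and the input is assumed to be non-empty.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to signed 8-bit integers."""
    max_abs = max(abs(v) for v in values)
    # One scale for the whole tensor; fall back to 1.0 for an all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Map the 8-bit integers back to approximate floating-point values."""
    return [q * scale for q in quantized]


weights = [0.12, -0.5, 0.33, 1.27, -1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q)          # [12, -50, 33, 127, -100]
print(max_error)  # never more than half a quantization step
```

Each weight now fits in one byte instead of four, and the rounding error stays within half a step of the scale. That is a trade most AI workloads tolerate and a stellar simulation would not.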

As you might expect by now, it won’t be as easy as comparing numbers, or you wouldn’t be reading this (are you?). ASIC manufacturers tend to present their performance in terms of tokens per second, but this metric represents only the most favorable way of slicing the numbers since ASICs are, by their very nature, faster than GPUs at generating tokens. In the shipping industry, there is a convenient unit called TEU (twenty-foot equivalent unit) to measure how much volume a given container occupies. I wish there was a TEU (Token Equivalent Unit) for accelerator performance, but there is none. So, in the absence of that, we will have to get creative and consider all factors:

TCO: hardware costs, power, cooling, space in the datacenter

P/W/$: Performance per Watt per Dollar

Throughput per $

Inference/s/$: Inferences per second per dollar

Software stack maturity

Flexibility
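
The quantitative factors in this list can be put side by side with a few lines of code. The sketch below is only a way of organizing the comparison: the class, its fields, and all the numbers are hypothetical, and TCO is reduced to hardware plus electricity (cooling and datacenter space are left out).

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 24 * 365


@dataclass
class Accelerator:
    name: str
    price_usd: float          # hardware cost
    power_watts: float        # average draw, assumed constant
    tokens_per_second: float  # sustained throughput at full load

    def tco_usd(self, years: float, usd_per_kwh: float) -> float:
        """Hardware plus electricity over the given period."""
        energy_kwh = self.power_watts / 1000 * HOURS_PER_YEAR * years
        return self.price_usd + energy_kwh * usd_per_kwh

    def throughput_per_dollar(self) -> float:
        """Tokens per second for each dollar of hardware."""
        return self.tokens_per_second / self.price_usd

    def perf_per_watt_per_dollar(self) -> float:
        """Tokens per second, per watt, per dollar of hardware."""
        return self.tokens_per_second / self.power_watts / self.price_usd

    def usd_per_million_tokens(self, years: float, usd_per_kwh: float,
                               utilization: float) -> float:
        """TCO divided by the tokens actually served at this utilization."""
        seconds = HOURS_PER_YEAR * 3600 * years
        tokens = self.tokens_per_second * utilization * seconds
        return self.tco_usd(years, usd_per_kwh) / tokens * 1e6


# Made-up figures, for illustration only.
gpu = Accelerator("gpu", price_usd=30_000, power_watts=700, tokens_per_second=1_000)
asic = Accelerator("asic", price_usd=60_000, power_watts=300, tokens_per_second=5_000)

for chip in (gpu, asic):
    cost = chip.usd_per_million_tokens(years=3, usd_per_kwh=0.10, utilization=0.5)
    print(f"{chip.name}: ${cost:.3f} per million tokens")
```

Utilization sits in the denominator, so the same chip looks cheap or expensive depending on how much traffic you can actually send its way. Software maturity and flexibility do not reduce to a number this easily, which is part of the problem.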

One point immediately stands out. When dealing with GPUs, a buyer only needs to keep a couple of things in mind: 1) the number of requests to fulfill and 2) the size of the model in use (to decide on the VRAM requirements). From there, the decision comes down to owning the hardware or renting it via the cloud. It’s relatively clear-cut.

With ASICs, the situation is more complicated and requires a business to have much better insights into its own needs before making a decision. This leads me to the next point: unlike with GPUs, there might be enough different use cases to justify the success of multiple specialized players in the accelerators market.

Given the high cost of ASICs, as already mentioned, all providers are offering access to their hardware on a cloud platform. This is a very good move as they can front the cost of the hardware, amortize it over time, and customers can benefit from generating content at a much lower cost than renting a GPU to support the same workload. Of course, as long as moving your data off-site is not a concern—a privilege not all entities can enjoy.

Ultimately, assuming customers can correctly assess their "inference/s/$" metric on each platform, the decisive factor is how mature the software stack is and how easy it is to integrate (and iterate) on the provided infrastructure. Flexibility, while desirable—Groq, in particular, has chosen a path of flexibility at the cost of some performance to avoid having to redesign their chip at the next architectural change—is not strictly necessary if 1) engineers don’t need to tinker with the base model and 2) integration is easy enough that, should the need arise, a company can quickly switch from one provider to another.

The last factor is represented by hyperscalers. AWS is already offering Inferentia targeted at providing inference services for LLMs. It must be said that a CSP would probably have the upper hand when it comes to software and ecosystem. Amazon uses Trainium and Inferentia both internally and as a service to customers, so they have both capacity and strong incentives to offer a reliable and rich software stack. On this last point, I’m confident that most of the difference will come simply from pricing. Amazon is generally rather expensive, and price optimization for new vendors should be a very viable way of competing against the giants.

So to answer the question we had at the beginning: lowering the barrier to entry requires not only an attractive entry point, but also a mature software ecosystem and the easiest possible integration. If the last two criteria are satisfied, customers will have fewer concerns about obsolescence. They can de-risk their approach by purchasing cloud credits, instead of hardware, confident that they can switch relatively quickly if needed.

In Conclusion

Inference Accelerators might well become what makes GenAI workloads viable from a business perspective. We are still in the early days, and since such chips don’t yet enjoy the effects of economies of scale, they remain both very performant and very expensive.

Companies with a defined business model that relies on a highly supported set of AI models, that do not need to tinker with them (besides the usual fine-tuning), and that are unsure about the level of traffic to serve over a period of 2-3 years should probably save on CapEx, pick the easiest API to integrate, and rent the accelerators as a cloud service. This approach helps to de-risk most shortcomings from an immature software-ecosystem.

On the contrary, companies that expect large workloads for extended periods of time and/or cannot send their data off-site, might consider purchasing the hardware directly. Priority should be given to ASICs that adopt widely supported ISAs (yes, they will want a good debugger!) and offer enough flexibility—again, at the cost of some performance—to allow experimentation on a slightly diverse set of architectures for at least the next 3 years. In this specific case, software maturity is of paramount importance, while flexibility varies from case to case.

It’s difficult to speculate which of the existing vendors will succeed, and I won’t even try. Not because I don’t like to play with the future, but because the success of any player will come, almost exclusively, from innovations at the foundational AI level, which have nothing to do with how the hardware looks. If Transformers are really here to stay, the most efficient architecture will prevail. But if architectures change frequently, the most flexible vendor will come out victorious. And if we abandon Transformers altogether? Well then, as already mentioned, it’s back to the drawing board for everyone.

LLama 3.1 405B is Out!

I’ll keep this brief as this post is already quite long, and I would like to spend some time evaluating this new LLama. Also, I’m happy that my sophisticated model correctly predicted, back in April, the release of LLama 3.1 405B. Thank you, math!

As expected, the model is rather massive at 405B parameters, and it’s already available on meta.ai for testing. Along with the massive 405B, Meta also released updated versions of both the 8B and 70B models, and I’m frankly impressed by the boost in performance in just a few months.

While reasoning remains quite the same, as expected, tool use sees some impressive jumps. Ok, what about the big one?

GPT-3.5 has been utterly obliterated by a much smaller model, and that’s good news as it means efficiency per parameter is going up. The large model slightly beats GPT-4o and falls on par with Claude-3.5-Sonnet, which leaves me wondering what will happen when Claude-3.5-Opus is released, especially considering how well Sonnet is already doing at reasoning with its relatively modest size.

Enjoy the model on meta.ai for now. We’ll get back to it in the next update!

CrowdStrike, What the Heck?!

Edit 25/Jul: I just came across an analysis from @taviso on Twitter about the bug that caused the entire mess. For the sake of accuracy, it’s not a NULL-pointer dereference but an out-of-bounds memory read. The metaphor used previously has been updated accordingly. Also, a more detailed analysis of the event has been published by CrowdStrike, if you’re interested in a more technical read.

Unless you come from cybersecurity or your IT admin is overzealous, the first time you heard about CrowdStrike was probably on July 19th, because you either couldn’t fly somewhere or had to take a day off from work as your computer wasn’t working. In either case, it was a memorable day for you!

CrowdStrike is an EDR (Endpoint Detection and Response) platform, or software that monitors your computer for signs of attacks with the ability to track and eradicate them. Occasionally, the overzealous IT admin might block perfectly legitimate applications, but that’s more about your company’s policies than CrowdStrike itself.

To do all these things, you need to install an agent, which is software that constantly monitors your computer, acquires telemetry (connections your computer makes, applications started, what such applications are doing, and so on), and sends it back to CrowdStrike servers for analysis. Occasionally, CrowdStrike servers will talk to your computer to send data that allows the agent to find new kinds of attacks.

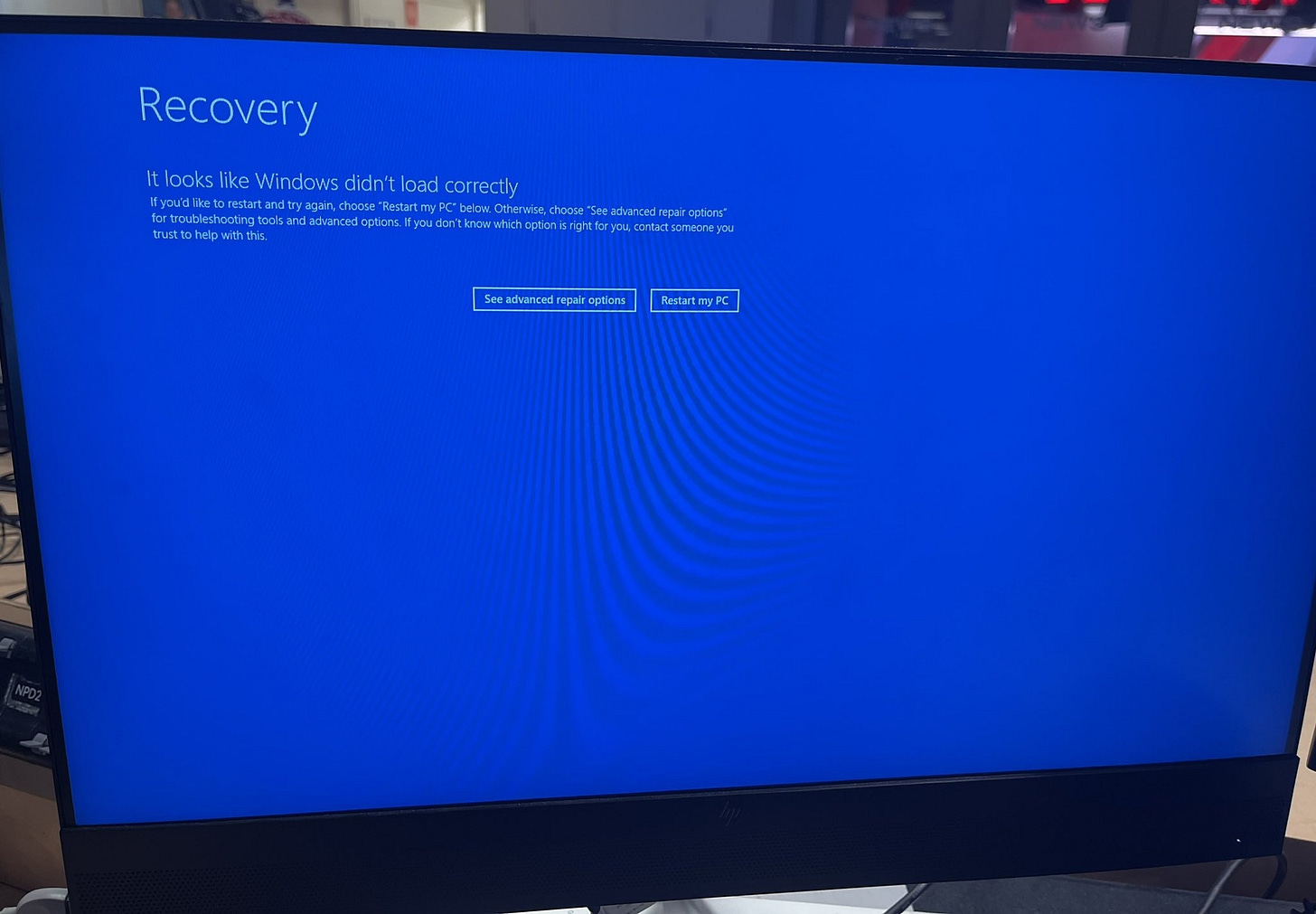

The agent is like a regular application, like Word, Chrome or Excel. But the agent does part of its job via something called a driver. A driver is a special application that is almighty—it runs with the highest privileges possible (in kernel space) and can do everything you can’t on your computer. With great power comes great responsibility, as Uncle Ben said, and this is very true for drivers. A mistake in kernel space, perhaps because of faulty code in the driver, doesn’t just crash an application; it crashes the entire computer with what is called a BSOD, or Blue Screen Of Death.

The problem is that if the driver automatically starts when you reboot the machine, you will end up in what I call a BSOD-loop. Not the position you want to find yourself in when you’re trying to print boarding passes, clear flights for takeoff, or undergo open-heart surgery.

Someone, for some reason, committed the cardinal sin of kernel-coding: accessing invalid memory. If this sentence looks random to you, take this metaphor: Your God comes down to Earth and grants you all his powers, then goes to the next wall, drills a hole, and tells you: You can do anything you dream of, but for God’s sake, you shall never look into the forbidden wall, or all hell will break loose. You, being the compliant human I’m sure you are, of course, don’t want to stick your finger into the hole in the wall; what do you expect to find aside from spiders or wasps? You use your newly found powers to create sheep as small as hamsters and hamsters as big as bears, eradicate mosquitoes and jellyfish, make all sharks vegetarian, and probably clone Margot Robbie a few hundred times until one version marries you. Well, that’s you, and you’re not a CrowdStrike driver developer, right?

They couldn’t resist the temptation of the forbidden wall, and all hell broke loose. They looked into the hole in the wall.

As you probably know, I was the CEO of ReaQta (now part of IBM), an EDR company. We used to regularly compete on deals with CrowdStrike and other EDR companies. ReaQta didn’t just use a driver; we used a full-on hypervisor, possibly the most delicate piece of software you can even dream of running on a computer. Imagine a driver on steroids—lots and lots of steroids, cocaine, and the occasional melee weapon.

We were, of course, terrified at the idea that our customers could suffer from a BSOD-loop. Now you know why. To make sure such an event could never occur, we extensively tested, often for weeks, all changes to the code of our hypervisor, even if the change was just a single line. Only then did we push the update to a select circle of sacrificial (of course, willing!) customers, and then to the general population.
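
The "small circle first, everyone else later" idea is usually called a staged (or ring-based) rollout. Here is a minimal sketch of the control flow, assuming a hypothetical `deploy` callback that returns whether a host came back healthy. It is not how ReaQta, CrowdStrike, or any other vendor actually ships updates.

```python
from typing import Callable, List, Sequence


def staged_rollout(rings: Sequence[Sequence[str]],
                   deploy: Callable[[str], bool]) -> List[str]:
    """Deploy ring by ring and stop at the first ring that reports a failure.

    Returns the hosts that received the update, including any that failed.
    """
    updated: List[str] = []
    for ring in rings:
        results = [(host, deploy(host)) for host in ring]
        updated.extend(host for host, _ in results)
        if not all(ok for _, ok in results):
            break  # halt here: later rings never see the faulty update
    return updated


# Hypothetical fleet: internal QA machines, willing early adopters, everyone else.
rings = [["qa-1", "qa-2"], ["early-1"], ["fleet-1", "fleet-2", "fleet-3"]]

# Pretend the update crashes the early adopter's machine.
touched = staged_rollout(rings, deploy=lambda host: host != "early-1")
print(touched)  # ['qa-1', 'qa-2', 'early-1']
```

The failure still hurts, but it hurts one willing customer instead of the whole fleet.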

Extensive QA made sure that we never encountered such an issue, so this raises an obvious question: How did something like that happen?

EDRs are delicate and necessary components. They’re now the foundation of the entire host-based security stack and, especially at the enterprise level, it’s unthinkable to do without them. This demands the utmost care when deploying updates to millions of devices. You cannot “test in production” at that scale (or any scale!), and it’s now clear why.

What I’m interested in seeing, as I expect lawsuits and settlements, is how the various liability waivers that are part of every software EULA will actually be enforced and to what extent. Of course, proving negligence here is difficult but certainly not impossible, and the effects of this event will create a precedent for future incidents of a similar nature.

You might remember the ransomware WannaCry in 2017, one of the most devastating global incidents since the Information Age began. WannaCry infected “only” 300,000 computers. CrowdStrike managed to crash at least 8.5 million computers.

All right, that’s enough for today, go and have fun, don’t stick your fingers in the wall!

[1] There are other well-known standards, like OpenCL, which is massive and generally complex to implement. oneAPI includes parts of both OpenCL and Vulkan (et al.) to make life a little easier for all vendors.